Concurrency is not Parallelism

- tags

- #go #golang #concurrency

In my previous post I discussed how the simple rules of the Boids algorithm, applied to independent actors aware only of their local environments, produces beautiful, realistic flocking behaviour. While writing I was reminded of another of my favourite examples of emergent behaviour: Conway’s Game of Life. For fun, I decided to implement it in Go - and found that it demonstrates well that old Go proverb:

Conway’s Game of Life

In 1970, John Conway invented his “Game of Life” (GoL): a simple cellular automaton, from which emerges a curious life-like behaviour, almost as if one was looking through a microscope into a Petri dish.

The GoL universe is a matrix of cells, each of which is either alive (depicted black) or dead (depicted white). Each cell transitions between these states according to the following rules:

- Any live cell with two or three live neighbours survives.

- Any dead cell with three live neighbours becomes a live cell.

- All other live cells die in the next generation. Similarly, all other dead cells stay dead.

A neighbour refers to any of the immediately adjacent cells.

Implementing GoL in Go

When approaching a new problem, always implement a simple solution first. As Donald Knuth famously said:

“The real problem is that programmers have spent far too much time worrying about efficiency in the wrong places and at the wrong times; premature optimization is the root of all evil (or at least most of it) in programming.” - Donald Knuth

With this in mind, let’s implement a super simple GoL algorithm in Go!

Modelling the World Grid

First we need a model of the world:

// World describes the world grid.

type World struct {

Width, Height int

// Grid describes the set of cells in the world and their alive/dead state.

// Note that the first row in the array corresponds to the bottom of the screen,

// and the first element of a row corresponds to the left side of the screen.

Grid [][]bool

}

We can instantiate a World like so:

// NewWorld returns a World of the given dimensions with a randomly initialised grid.

func NewWorld(width, height int) *World {

world := &World{

Width: width,

Height: height,

}

world.Grid = make([][]bool, height)

for y := range world.Grid {

world.Grid[y] = make([]bool, width)

for x := range world.Grid[y] {

world.Grid[y][x] = rand.Float64() < 0.2

}

}

return world

}

Counting Neighbours

In order to implement Conway’s rules, we will need to be able to count the number of alive neighbours to a given cell:

// WrapCoords accepts an (x, y) coordinate and returns a coordinate which is

// within the bounds of the World, using a toroidal wrapping.

func (w *World) WrapCoords(x, y int) (int, int) {

if x < 0 {

x = w.Width - 1

}

if x > w.Width-1 {

x = 0

}

if y < 0 {

y = w.Height - 1

}

if y > w.Height-1 {

y = 0

}

return x, y

}

// CountLiveNeighbours returns the number of live neighbours

// around the given cell position.

func (w *World) CountLiveNeighbours(x, y int) int {

count := 0

for j := -1; j <= 1; j++ {

for i := -1; i <= 1; i++ {

if i == 0 && j == 0 {

continue

}

_x, _y := w.WrapCoords(x+i, y+j)

if w.Grid[_y][_x] {

count++

}

}

}

return count

}

This simple implementation wraps the world grid such that flying off the right hand side brings you back to the left, and flying off the top brings you to the bottom - i.e. a torus:

Conway’s Rules

Now that we can count neighbours, we can implement Conway’s rules:

// Update applies the rules to the world.

func (w *World) Update() {

// Create a deep copy of the world grid

nextGrid := make([][]bool, len(w.Grid))

for y := range w.Grid {

nextGrid[y] = make([]bool, len(w.Grid[y]))

copy(nextGrid[y], w.Grid[y])

}

// Apply Conway's rules

for y := range w.Grid {

for x := range w.Grid[y] {

n := w.CountLiveNeighbours(x, y)

if w.Grid[y][x] {

// Any live cell with fewer than two or more than three live neighbours dies

if n < 2 || n > 3 {

nextGrid[y][x] = false

}

} else {

// Any dead cell with exactly three live neighbours becomes a live cell

if n == 3 {

nextGrid[y][x] = true

}

}

}

}

// Update the world grid

w.Grid = nextGrid

}

Note how we create a copy of the world grid so that we don’t read the updated values of neighbouring cells.

Rendering to the Screen

Finally, it would be great to render the game to the screen. For this I used the excellent pixel library from @faiface. We can create a window and game loop in the same way as described in my previous post:

const (

// WindowWidth is the width of the window in pixels

WindowWidth = 1200

// WindowHeight is the height of the window in pixels

WindowHeight = 800

// WorldWidth is the width of the world in # cells.

WorldWidth = 1200

// WorldHeight is the height of the world in # cells.

WorldHeight = 800

)

func createWindow(title string, width, height float64) (*pixelgl.Window, error) {

window, err := pixelgl.NewWindow(pixelgl.WindowConfig{

Title: title,

Bounds: pixel.R(0, 0, width, height),

VSync: true,

})

if err != nil {

return nil, errors.Wrap(err, "new window failed")

}

window.SetSmooth(true)

return window, nil

}

// Run runs the game.

func Run() {

window, err := createWindow("GoLife", WindowWidth, WindowHeight)

if err != nil {

panic(errors.Wrap(err, "create window failed"))

}

frames := 0

second := time.Tick(time.Second)

world := life.NewWorld(WorldWidth, WorldHeight)

drawer := draw.NewDrawer()

for !window.Closed() {

drawer.DrawFrame(window, world)

world.Update()

window.Update()

frames++

select {

case <-second:

window.SetTitle(fmt.Sprintf("%s | FPS: %d", "GoLife", frames))

frames = 0

default:

}

}

}

func init() {

rand.Seed(time.Now().Unix())

}

Then, we can implement DrawFrame to draw the world grid on the window. We can achieve this by iterating over the world grid and rendering black rectangles at the coordinates of alive cells, applying a scaling factor to convert the grid coordinates to window coordinates:

// DrawFrame draws the world on the window.

func (d *Drawer) DrawFrame(window *pixelgl.Window, world *life.World) error {

window.Clear(colornames.Aliceblue)

d.Batch.Clear()

imd := imdraw.New(nil)

imd.Color = pixel.RGB(0, 0, 0)

scale := pixel.V(

window.Bounds().Max.Y/float64(len(world.Grid)),

window.Bounds().Max.X/float64(len(world.Grid[0])),

)

for y := range world.Grid {

for x := range world.Grid[y] {

if !world.Grid[y][x] {

continue

}

imd.Push(pixel.V(float64(x)*scale.X, float64(y)*scale.Y))

imd.Push(pixel.V(float64(x+1)*scale.X, float64(y+1)*scale.Y))

imd.Rectangle(0)

}

}

imd.Draw(d.Batch)

d.Batch.Draw(window)

return nil

}

Here’s the result with a 1200x800 world grid:

Faster!

Great, so our basic implementation works - but can we make it faster?

Benchmarking

First up, time for a speed test: let’s add some benchmarks. For the purposes of this post, we’ll ignore the rendering code and benchmark the core GoL update logic. If you are unfamiliar with writing benchmarks in the Go, I recommend this excellent post from Dave Cheney.

func benchmarkUpdate(sideLength int, b *testing.B) {

world := life.NewWorld(sideLength, sideLength)

for n := 0; n < b.N; n++ {

world.Update()

}

}

func BenchmarkUpdate30(b *testing.B) { benchmarkUpdate(30, b) }

func BenchmarkUpdate35(b *testing.B) { benchmarkUpdate(35, b) }

func BenchmarkUpdate40(b *testing.B) { benchmarkUpdate(40, b) }

func BenchmarkUpdate45(b *testing.B) { benchmarkUpdate(45, b) }

Here we are measuring the time taken to update different grid sizes. Running on my machine (with a 2m -benchtime to improve statistical significance):

❯ go test -timeout=1h -benchtime=2m -bench=. ./pkg/life/life_test.go

goos: darwin

goarch: amd64

BenchmarkUpdate30-4 2718184 53135 ns/op

BenchmarkUpdate35-4 2132811 69771 ns/op

BenchmarkUpdate40-4 1558998 101462 ns/op

BenchmarkUpdate45-4 1000000 121130 ns/op

BenchmarkUpdate50-4 319368 141794 ns/op

BenchmarkUpdate55-4 226026 162587 ns/op

BenchmarkUpdate60-4 190359 203564 ns/op

BenchmarkUpdate65-4 136521 248646 ns/op

PASS

ok command-line-arguments 788.950s

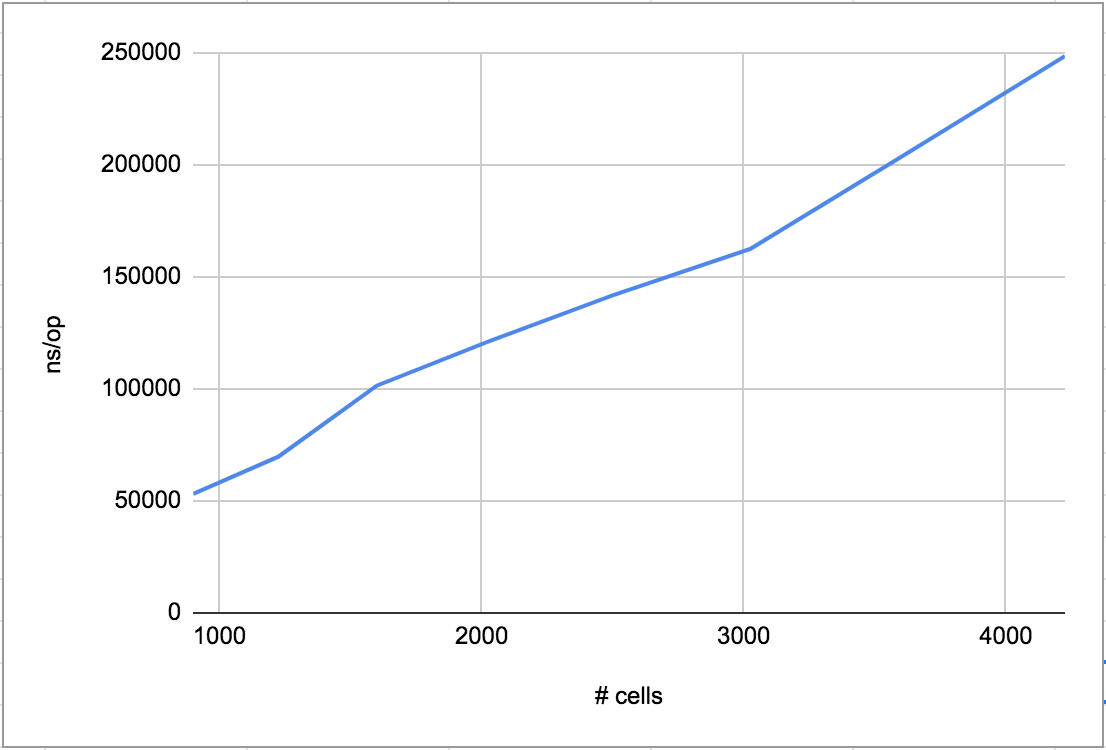

As expected, we can see that runtime grows linearly with the number of cells in the grid (the square of the height of the grid):

In order to compare the program performance as we optimise the code, let’s obtain a benchmark on a large grid of 10000x10000 cells:

# func BenchmarkUpdate10000(b *testing.B) { benchmarkUpdate(10000, b) }

❯ go test -benchtime=1m30s -bench=. ./pkg/life/life_test.go

BenchmarkUpdate10000-4 19 5031633976 ns/op

So, about 5 seconds on my machine to update the whole grid.

Partitioning the Grid

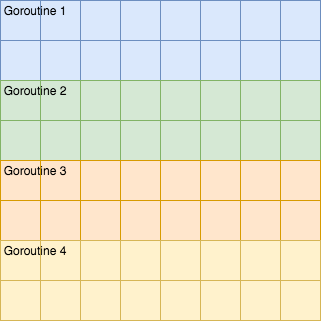

Can we speed up the program by partitioning the grid and computing each section of the grid concurrently (in their own goroutines)?

We can achieve this by executing the outer update loop over a specific range of rows:

// updatePartition applies the rules to a vertically-partitioned portion of the world.

func (w *World) updatePartition(wg *sync.WaitGroup, yStart, yEnd int, nextGrid [][]bool) {

defer wg.Done()

for y := range w.Grid[yStart:yEnd] {

y = y + yStart

for x := range w.Grid[y] {

n := w.CountLiveNeighbours(x, y)

if w.Grid[y][x] {

// Any live cell with fewer than two or more than three live neighbours dies

if n < 2 || n > 3 {

nextGrid[y][x] = false

}

} else {

// Any dead cell with exactly three live neighbours becomes a live cell

if n == 3 {

nextGrid[y][x] = true

}

}

}

}

}

Then we can call updatePartition concurrently for the desired number of partitions (note that the number of partitions must be a factor of the world height):

// Update applies the rules to the world.

func (w *World) Update(partitions int) {

// Create a deep copy of the world grid

nextGrid := make([][]bool, len(w.Grid))

for y := range w.Grid {

nextGrid[y] = make([]bool, len(w.Grid[y]))

copy(nextGrid[y], w.Grid[y])

}

// Apply Conway's rules

var wg sync.WaitGroup

for p := 0; p < partitions; p++ {

wg.Add(1)

go w.updatePartition(&wg, p*w.Height/partitions, (p+1)*w.Height/partitions, nextGrid)

}

wg.Wait()

// Update the world grid

w.Grid = nextGrid

}

As an aside, you may notice that our goroutines may concurrently read from the same cell. This can occur at the boundaries between partitions where a neighbouring cell is found in the adjacent partition. This is in fact not a race condition, since no writes occur on w.Grid; they only occur on the deep copy, nextGrid. Note that if we wanted to remove the synchronisation (achieved with the WaitGroup) that is currently required with each call to Update - that is, to allow goroutines to race ahead to the next generation - then we would instead need to use channels to communicate the state of the partition boundaries:

Don’t communicate by sharing memory, share memory by communicating.

Here are the results of running the 10000x10000 benchmark with 2 partitions on my machine:

❯ go test -benchtime=1m30s -bench=. ./pkg/life/life_test.go

BenchmarkUpdate10000-4 26 4110649931 ns/op

That’s down from ~5s to ~4s, a 20% improvement. We can see further improvement with 4 partitions:

❯ go test -benchtime=1m30s -bench=. ./pkg/life/life_test.go

BenchmarkUpdate10000-4 33 3073360188 ns/op

However, increasing the number of partitions beyond this doesn’t improve the runtime of our program!

❯ go test -benchtime=1m30s -bench=. ./pkg/life/life_test.go

BenchmarkUpdate10000-4 33 3051534928 ns/op

❯ go test -benchtime=1m30s -bench=. ./pkg/life/life_test.go

BenchmarkUpdate10000-4 27 3930115474 ns/op

Concurrency is Not Parallelism

Why doesn’t adding more than 4 goroutines lead to a speed up? There’s a clue in the benchmark output:

BenchmarkUpdate10000-4

That -4 postfix indicates the value of GOMAXPROCS during that run - that is, the number of OS threads available to our goroutines. My machine has 4 virtual cores, and by default (since Go 1.5) GOMAXPROCS matches this value.

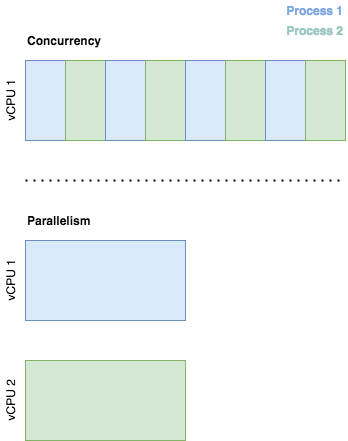

When we split the grid into 4 partitions, the Go runtime was able to schedule the goroutines into separate OS threads, which are executed in parallel across each vCPU. With more partitions, execution of each goroutine continues to be concurrent, but not in fact parallel.

What if N > GOMAXPROCS?

You may have heard goroutines describes as “lightweight threads” before. One of the benefits of this lightweight footprint is supposed to be that you can spawn a lot of them - but if spawning more than GOMAXPROCS goroutines doesn’t speed up our program, what is the point? The answer lies with the Go scheduler and the type of work that our goroutines are performing.

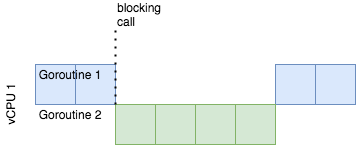

Note that update algorithm for Conway’s GoL is busy. There are no I/O or otherwise blocking calls and the algorithm makes maximal use of CPU time. Imagine instead our goroutines were executing code that made blocking calls. Then, the scheduler could allow other goroutines to use the CPU while the caller is blocked.

So how do you determine whether to add concurrency to your program? It’s important to profile your code to understand where adding concurrency will yield benefits and where it will not.

If you want to play around with this yourself, you can check out the source code. If you’ve got comments, questions or feedback you can hit me up on Twitter ✌️